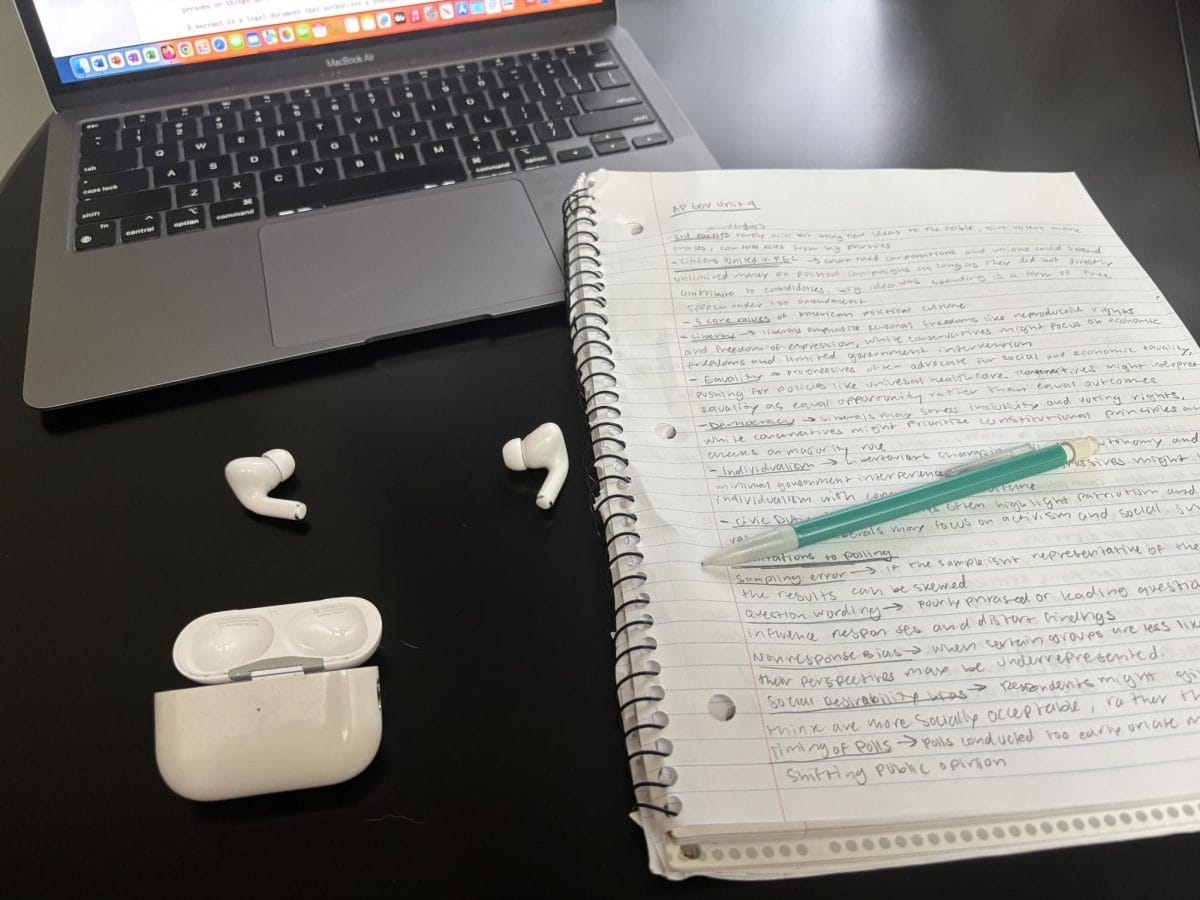

Students across the country are using artificial intelligence software like ChatGPT to enhance their writing or simply to write it for them. In recent years, this technology has advanced dramatically, and teachers are racing to find ways to verify their students' academic honesty.

The new AI wave has left teachers searching for ways to make sure their students are still retaining the material taught in the classroom.

“I spent time researching ChatGPT and redesigned the way I gave my assignments,” said English teacher Jeffery Klein.

For some teachers, it is extremely obvious when a student submits work that is not their own, and a student who is caught can seriously damage their academic reputation.

When teachers find signs of AI assistance, the usual consequence is a 0 on the assignment, but to many teachers, it means much more than just a number. Teachers lose trust in their students, and a single assignment can permanently damage the bond between them.

Among the English teachers we spoke with at our school, the general attitude toward ChatGPT is that if students feel the need to misrepresent their academic abilities, they should not submit any work at all.

Using AI can be much faster than actually doing an assignment, but it is debatable whether it really benefits students. Teachers of the subjects where ChatGPT is most applicable, especially English and history, fear that younger students who rely on the software are missing the chance to master the basic writing skills taught in class. That gap may come back to hurt them later, when they need to demonstrate those abilities on their own.

Older students who are no longer interested in practicing these skills, however, often see things differently. When it comes to difficult assignments, students will often take the path of least resistance.

“A lot of students are lazy. They will often find the easiest way to do work,” junior Mason Yuh said.

Some teachers are choosing to fight fire with fire. A new tool called GPTZero analyzes patterns of word choice, grammar and style to estimate whether a piece of writing was produced by AI. Teachers are using GPTZero to flag submissions that read unlike a student's usual writing, alerting them that AI may be in play.

Mira Murati, the chief technology officer at OpenAI, understands the risks of having AI in the classroom but thinks schools should give the technology more time to demonstrate its possible benefits. OpenAI is the company behind ChatGPT and has received widespread attention since the chatbot's release.

“It has the potential to revolutionize the way we learn,” Murati said.

Some districts are waiting to see whether AI will really help students learn, but many districts across the country, including New York City Public Schools, have already banned the technology.

Opinions from those outside school systems are fairly mixed. Supporters argue that AI is capable enough to enrich the classroom, while critics say ChatGPT lacks the ability to truly understand the complexity of human language and conversation.

Regardless of opinions on ChatGPT, teachers will now need to try a bit harder to catch students misusing AI tools. On March 14, OpenAI released an upgraded model, GPT-4, which the company describes as its most advanced system yet. GPT-4 can accept images as input, produce more humanlike responses and write computer code from almost any prompt.

The role of AI in education is still up in the air, and students will continue to use the technology until clearer rules define its boundaries. Teachers need to decide whether ChatGPT is a tool they can use or whether its advancement is detrimental to education. That decision will have to be made soon, since AI is only getting smarter.